Unofficial Geometry Script & DynamicMeshComponent FAQ

Geometry Script(ing) is a Blueprint/Python (UFunction) library first released in Unreal Engine 5.0 that allows users to query and manipulate triangle meshes (and a few other geometric data types). I initially developed Geometry Script based on some previous public experiments I published on this website, specifically Mesh Generation and Editing at Runtime and Procedural Mesh Blueprints.

At time of writing, Geometry Script is an Experimental feature plugin in UE 5.1, which means it has pretty minimal documentation and learning materials. I have published a short series of tutorial videos on YouTube demonstrating how to use Geometry Script Blueprints for various tasks, see the playlist here. Geometry Script was also used heavily in the level design of the UE5 Lyra sample game, see documentation here.

As the main developer of Geometry Script, I get a lot of questions about how to use it, what it can do, etc. A lot of the same questions. So this page is (hopefully) a living document that I will update over time. Geometry Script is used primarily to modify UDynamicMesh objects, and the main way you access or render a UDynamicMesh is via DynamicMeshComponent / DynamicMeshActor. So, this FAQ will also cover some aspects of DynamicMeshComponent that frequently come up in the context of Geometry Scripting.

If you have questions this FAQ doesn’t answer, you might try posting on the Unreal Developer Community forums (https://dev.epicgames.com/community/), asking in the #geometry-scripting channel on the UnrealSlackers Discord (https://unrealslackers.org), or @ me on Mastodon (https://mastodon.gamedev.place/@rms80), or (still) Twitter (https://twitter.com/rms80). Note, however, that I strongly prefer to answer questions in public rather than in private/DM.

(Mandatory Disclaimer: your author, Ryan Schmidt, is an employee of Epic Games. However, gradientspace.com is his personal website and this article represents his personal thoughts and opinions. About triangles.)

Contents

(note: sorry, none of these are linked yet - soon!)

Basics

Is there any documentation for Geometry Script at all?

Does Geometry Script have a function for X?

Is there a published Roadmap for Geometry Script?

None of these Geometry Script functions show up for me in the Blueprint Editor

Some functions are missing when I try to use them in my Actor Blueprint

I can’t find the function “Event On Rebuild Generated Mesh” to override in my Actor Blueprint

Does Geometry Script always run on the Game Thread?

Can I run GeometryScript functions in a background thread or asynchronously?

Is there any built-in support for running Geometry Script functions asynchronously?

Can I run a Geometry Script Blueprint on the GPU?

Does Geometry Script work with Skeletal Meshes?

Does Geometry Script work with Landscape, Geometry Caches, Geometry Collections, Hair/Grooms, Cloth Meshes, or some other UE Geometry Representation?

Is Geometry Script Deterministic? Are scripts likely to break in the future?

Can I animate a mesh with Geometry Scripting? Can I implement my own skinning or vertex deformation?

Runtime Usage

Can I use Geometry Script in my Game / At Runtime?

Should I use DynamicMeshActors generated with Geometry Script in my Game?

Will DynamicMeshComponent be as efficient as StaticMeshComponent in my Game?

Why are all my GeneratedDynamicMeshActors disappearing in PIE or my built game ?!?

Is GeometryScript function X fast enough to use in my game?

How can I save/load Dynamic Meshes at Runtime?

Can I use Geometry Script to modify Static Meshes in my Level at Runtime?

The function “Copy Mesh From Static Mesh” is not working at Runtime

The Mesh I get when using “Copy Mesh From Static Mesh” at Runtime is different than the mesh I get in the Editor

The functions “Apply Displace from Texture Map” and/or “Sample Texture2D at UV Positions” are working in the Editor but not at Runtime

Rendering and UE Features

Does DynamicMeshComponent support Nanite, Lumen, or Mesh Distance Fields?

Does DynamicMeshComponent work with Runtime Virtual Texturing (RVT)?

Does DynamicMeshComponent support Physics / Collision?

DynamicMeshComponents don’t show up in Collision Debug Views!

Does DynamicMeshComponent support LODs?

Does DynamicMeshComponent support Instanced Rendering?

Lyra Sample Game

How does the Non-Destructive Level Design with GeometryScript-based mesh generator “Tool” objects work in Lyra?

How can I migrate the Lyra Tool system to my own project?

Basics

Is there any documentation for Geometry Script at all?

Yes! Here is a direct link into the UE5 documentation: https://docs.unrealengine.com/5.1/en-US/geometry-script-users-guide .

Several livestream and tutorial sessions have also been recorded. At UnrealFest 2022, the Introduction to Geometry Scripting session demonstrated how to create various Editor Utilities with Geometry Script, and during the Modeling and Geometry Scripting in UE: Past, Present, and Future session I gave a brief demo and some high-level context around Geometry Script. Earlier in 2022, I participated in an Inside Unreal livestream where I did some Geometry Scripting demos.

Does Geometry Script have a function for X?

This is often a difficult question to answer without more information. However, a relatively complete reference for all the current Geometry Script library functions is available in the UE5 documentation here: https://docs.unrealengine.com/5.1/en-US/geometry-script-reference-in-unreal-engine

Is there a published Roadmap for Geometry Script?

Currently there is not. Geometry Script is being actively developed and the most effective way to see what is coming in the next UE5 Release is to look at what commits have been made in the UE5 Main branch in the Unreal Engine GitHub. Here is a direct link to the Geometry Script plugin history: https://github.com/EpicGames/UnrealEngine/commits/ue5-main/Engine/Plugins/Experimental/GeometryScripting. Note that this link will not work unless you are logged into GitHub with an account that has access to Unreal Engine, which requires you to sign up for an Epic Games account (more information here).

None of these Geometry Script functions show up for me in the Blueprint Editor

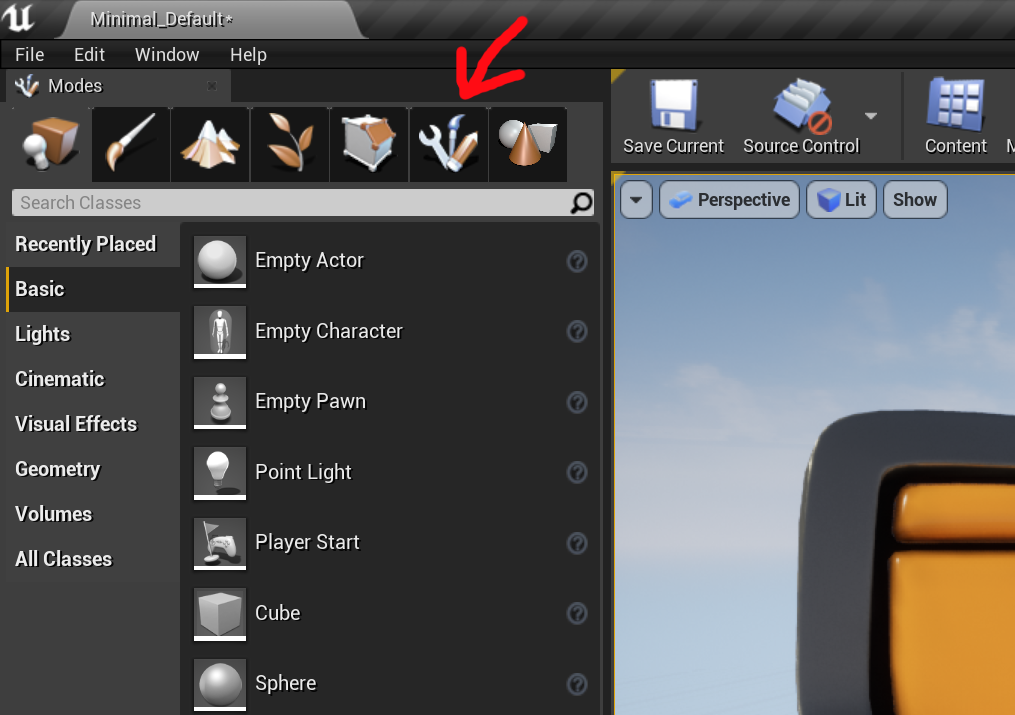

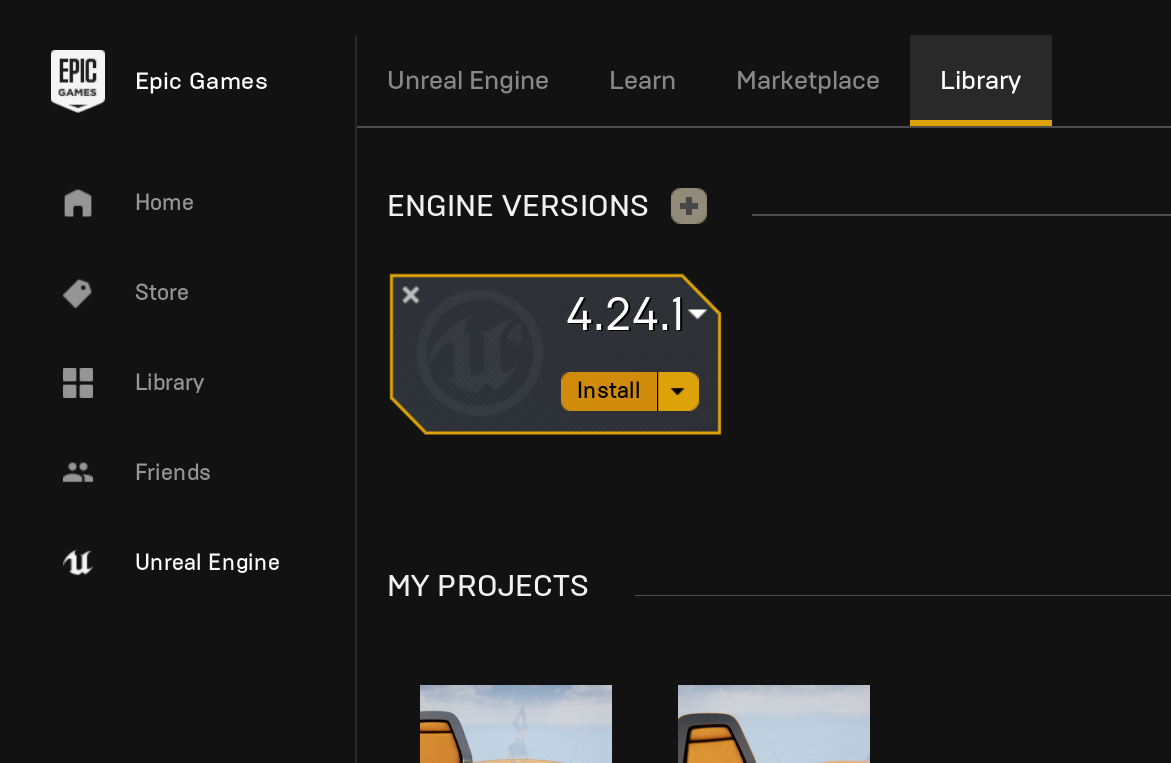

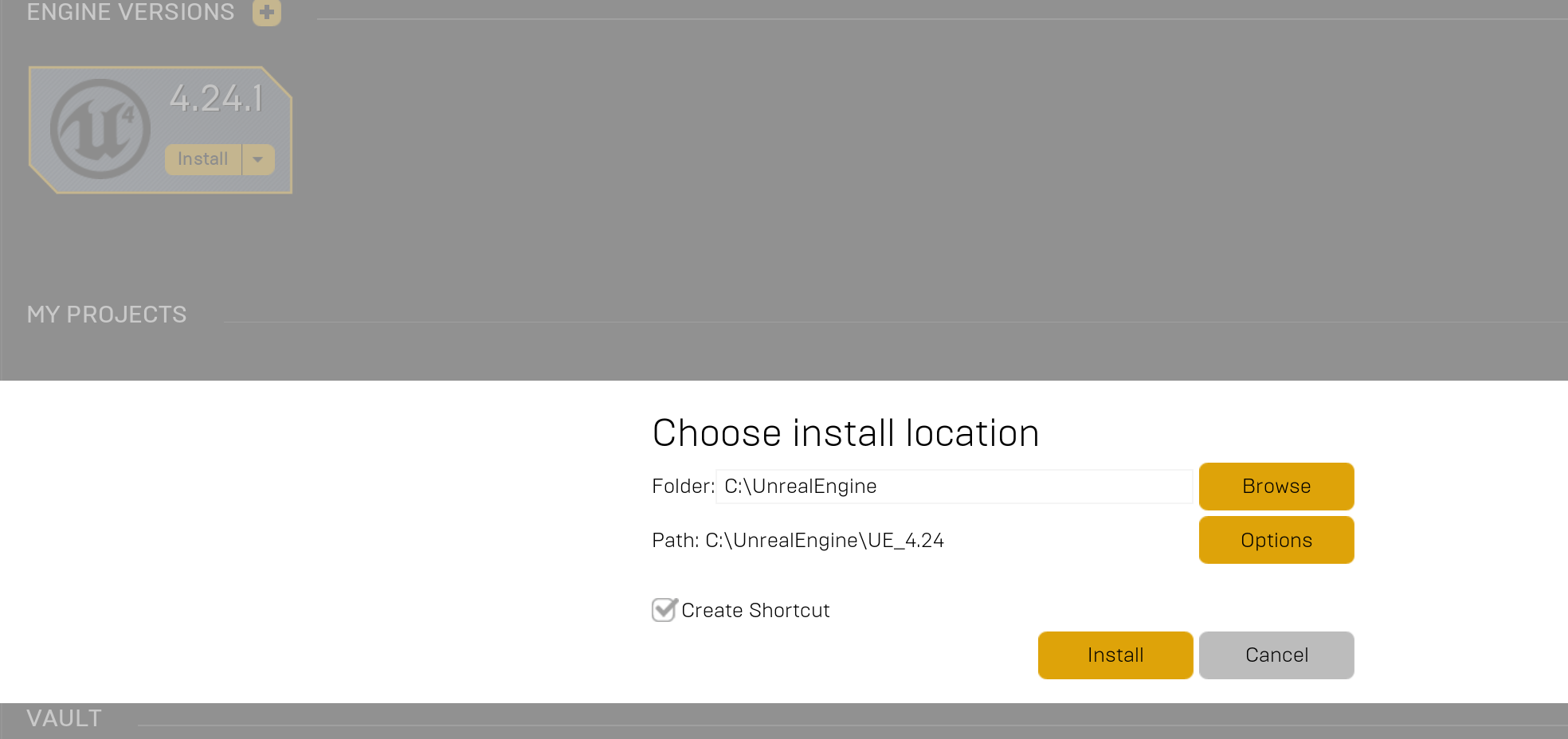

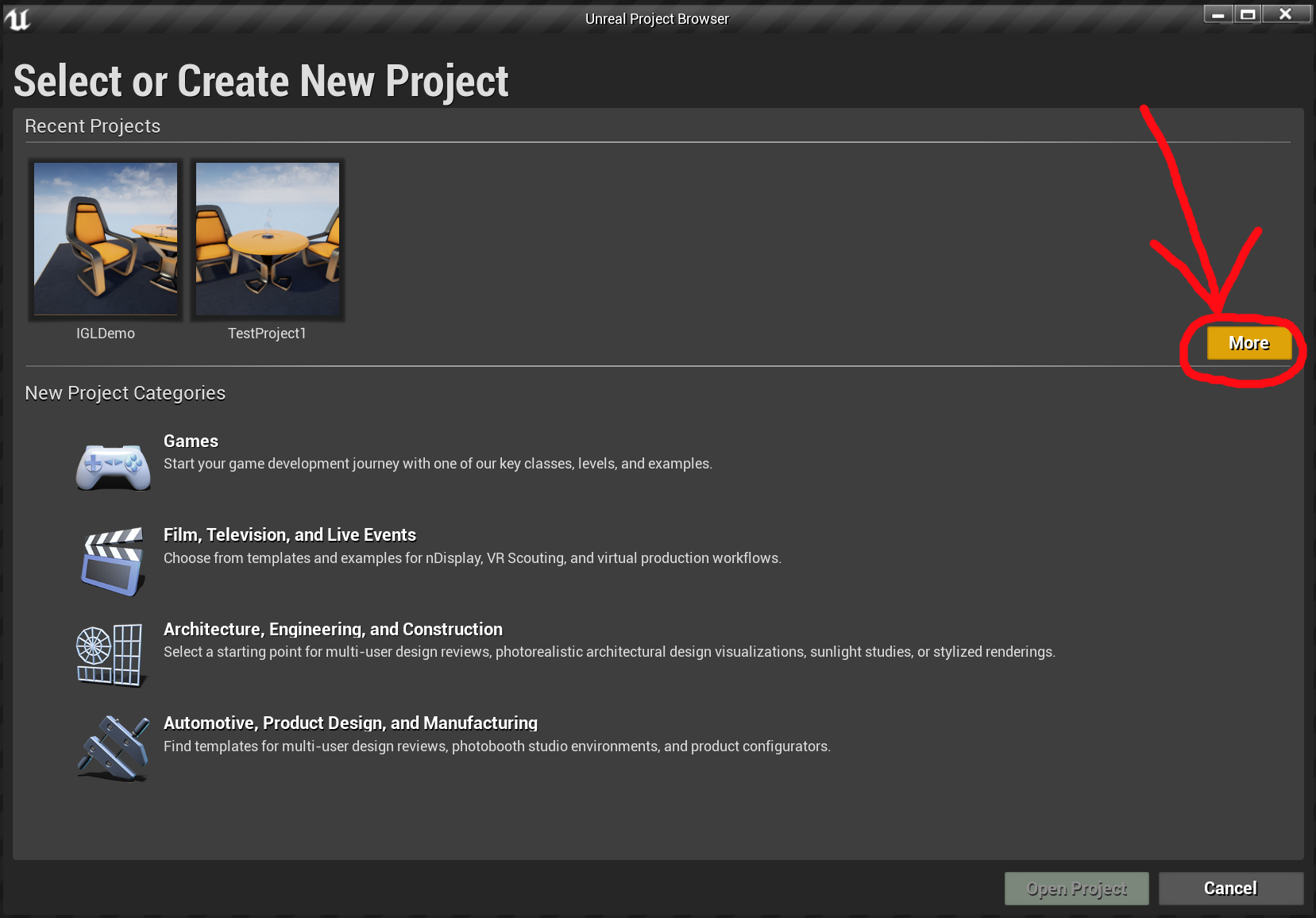

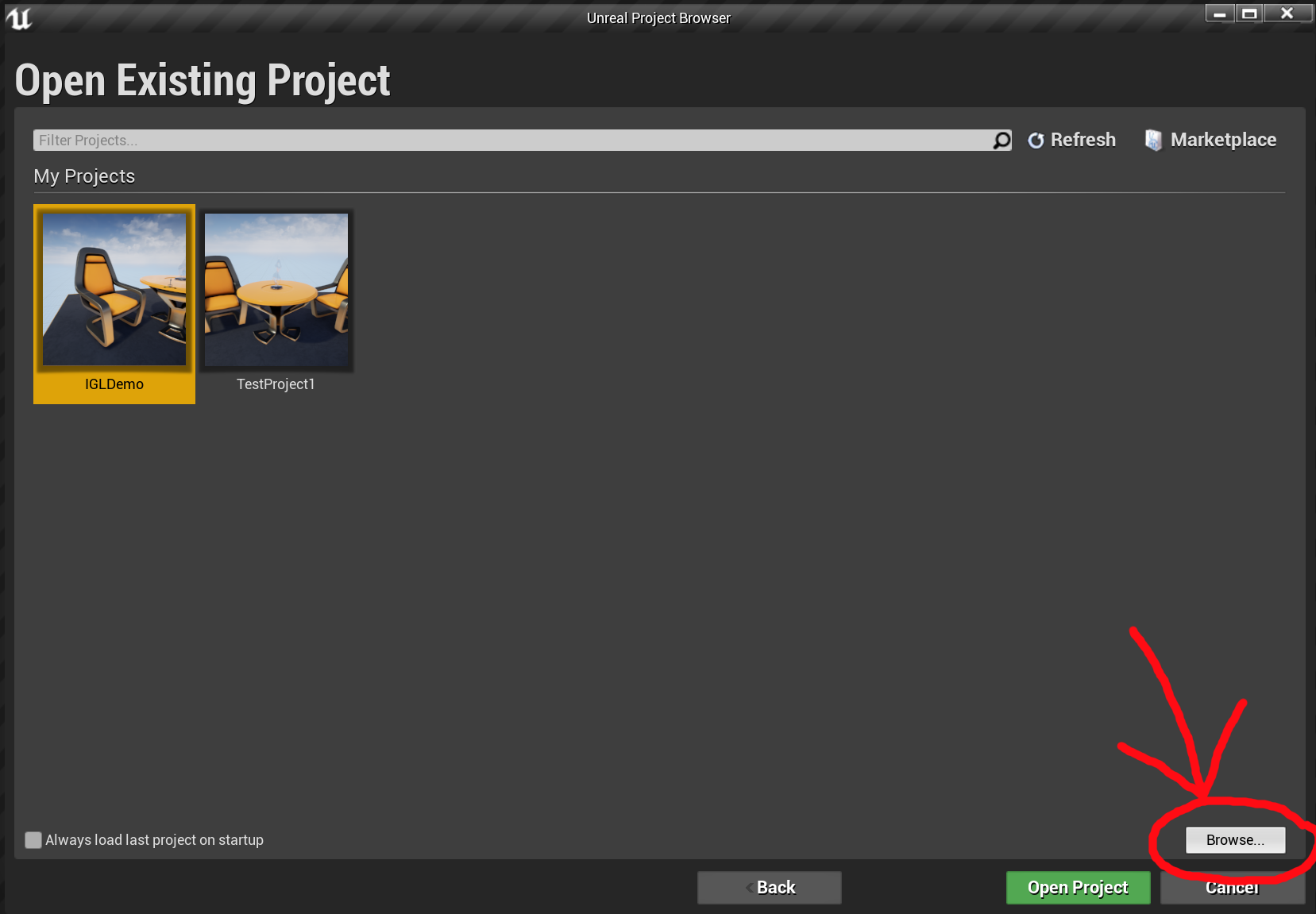

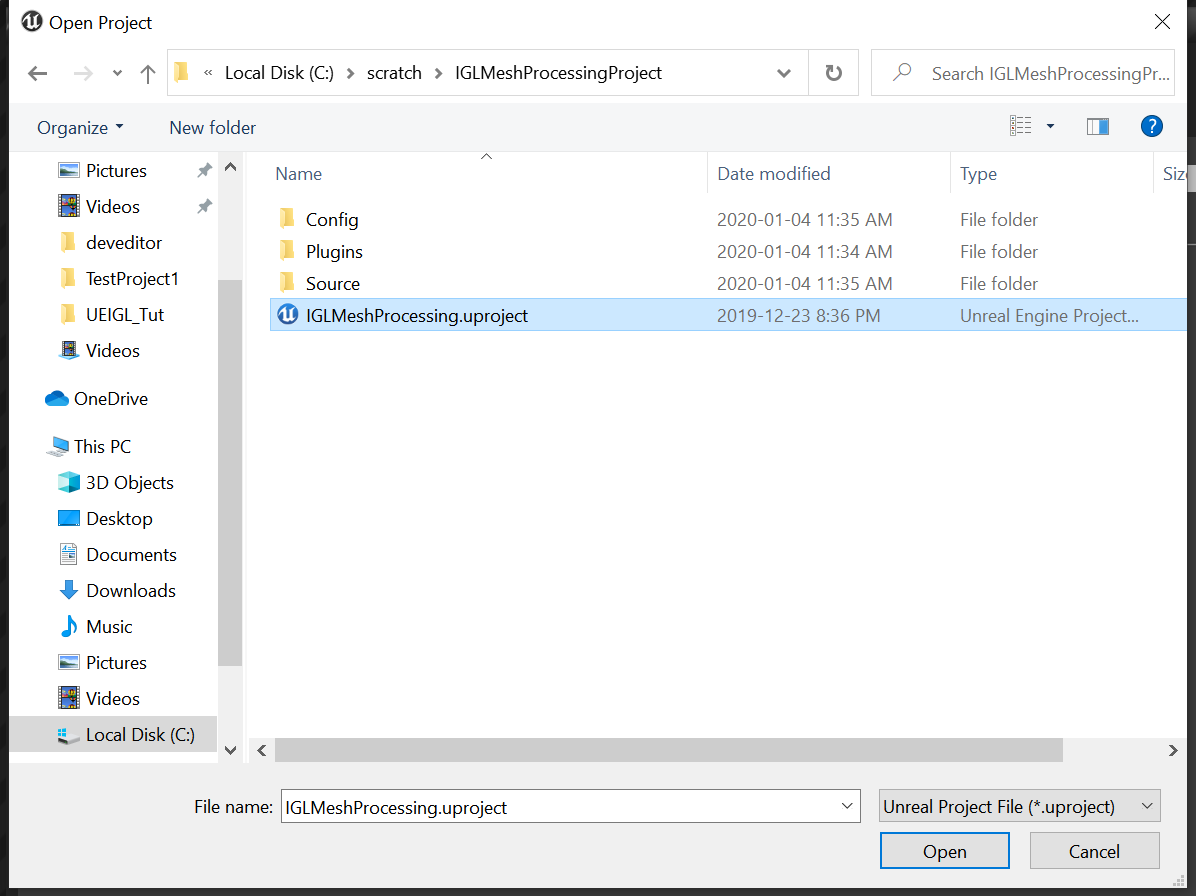

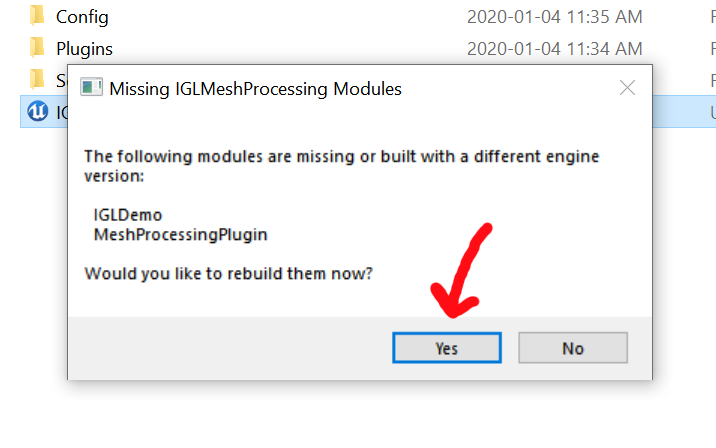

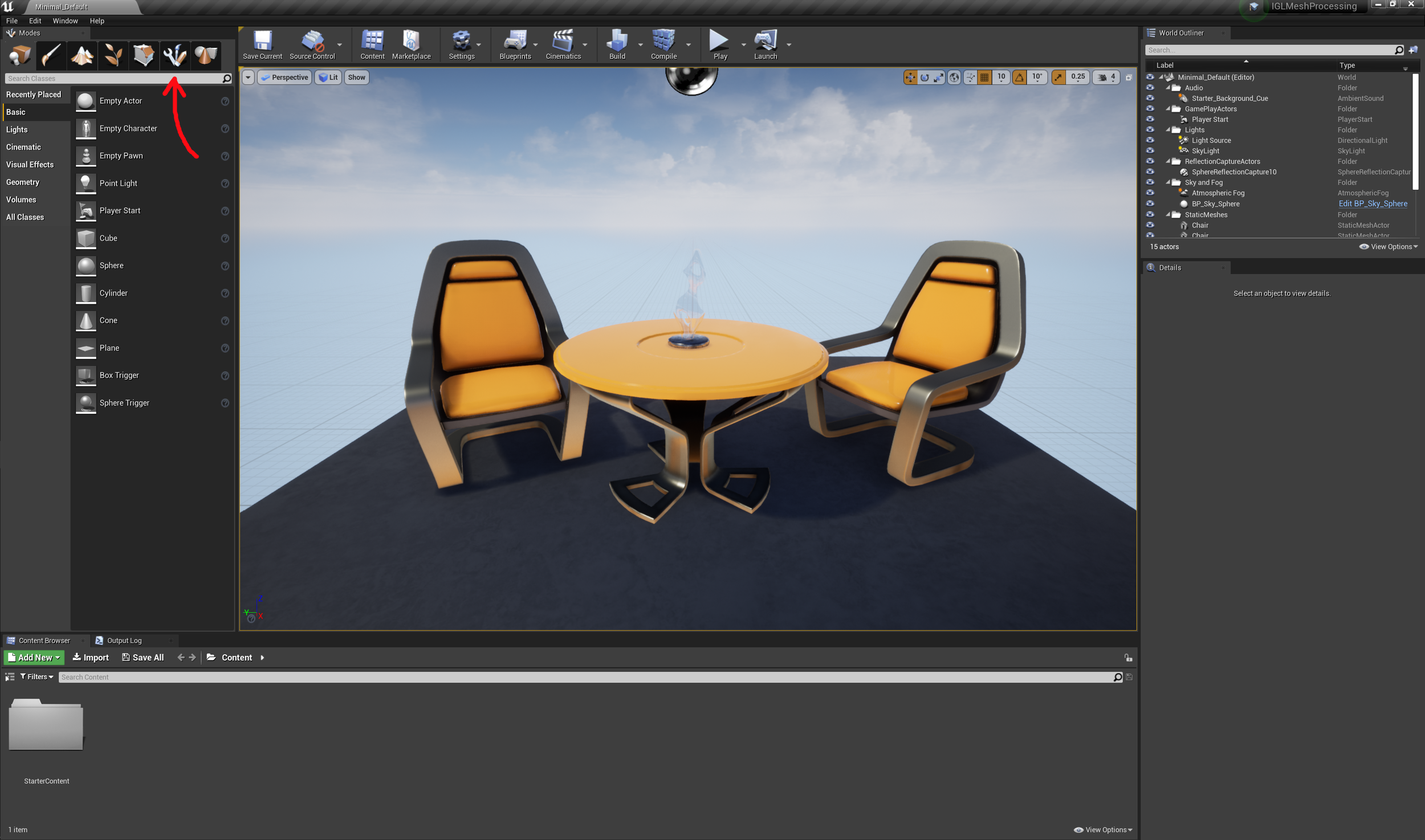

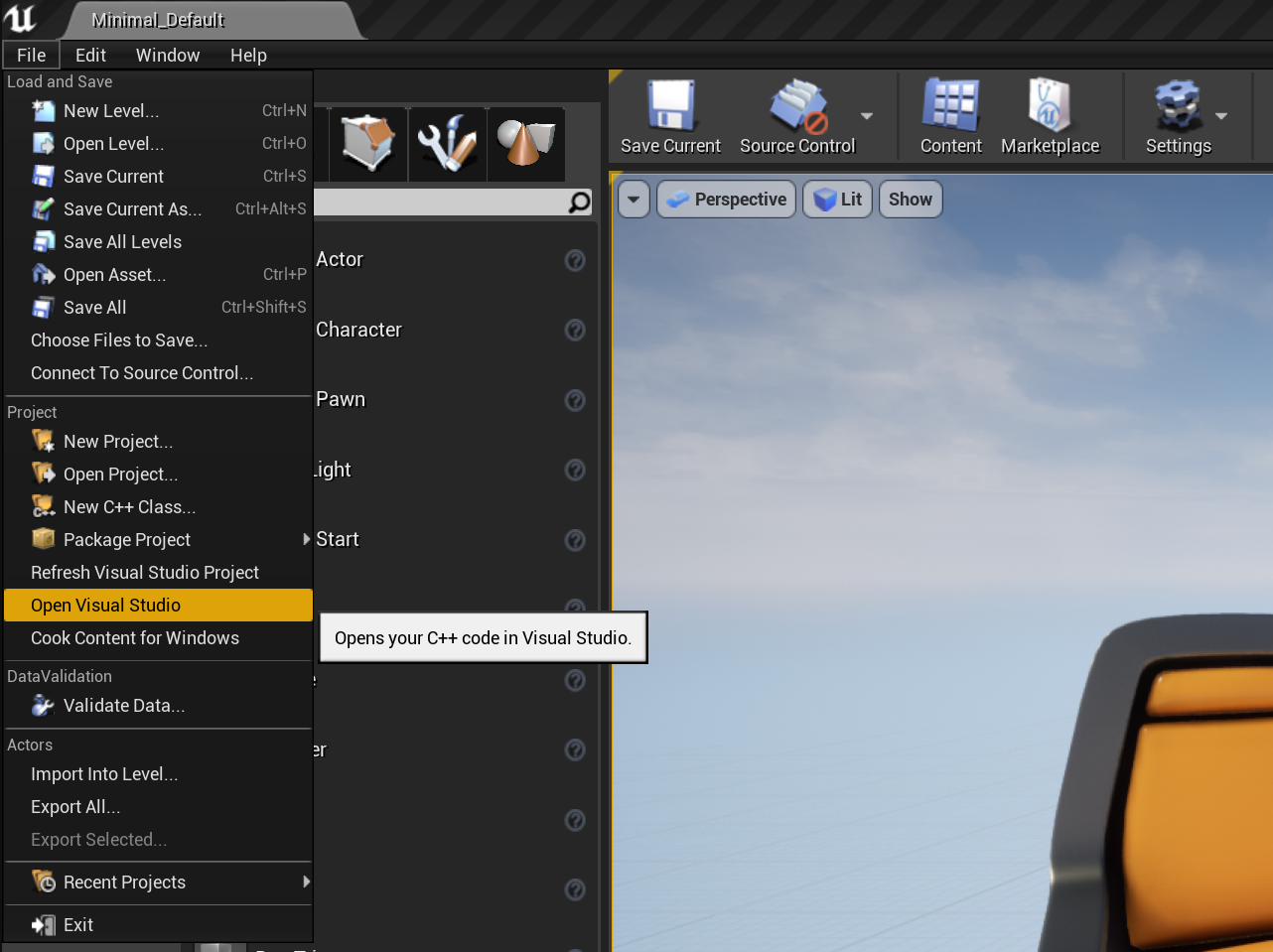

You probably don’t have the Geometry Script plugin enabled. It is not enabled by default. The first video in my Geometry Script Tutorial Playlist on YouTube shows how to turn on the Plugin.

Some functions are missing when I try to use them in my Actor Blueprint

You are likely trying to use a function that is Editor-Only. Some functions like creating new Volumes or StaticMesh/SkeletalMesh Assets, and the Catmull Clark SubD functions, are Editor-Only and can only be used in Editor Utility Actors/Actions/Widgets, or GeneratedDynamicMeshActor BP subclasses.

I can’t find the function “Event On Rebuild Generated Mesh” to override in my Actor Blueprint

This event only exists in Actor Blueprints that derive from the GeneratedDynamicMeshActor class. It’s likely you are trying to find it in a generic Actor Blueprint, or in a DynamicMeshActor Blueprint.

Does Geometry Script always run on the Game Thread?

Currently Yes. Actor Blueprints and Editor Utility Blueprints are always executed on the Game Thread, and so the Geometry Script functions that are called also run on the Game Thread. Some Geometry Script functions will internally execute portions of their work on task threads, eg via C++ calls to ParallelFor, Async, or UE::Tasks::Launch(). However this will only occur in the context of a single function, and the function will not return until all that parallel work is completed.

Can I run GeometryScript functions in a background thread or asynchronously?

It is generally not considered safe to modify any UObject in a background thread. Geometry Script functions modify a UDynamicMesh, which is a UObject, and technically it is possible for a UObject to become unreferenced and garbage-collected at any time.

However, if in your specific use case you know that the UObject will not become unreferenced, then most(***) Geometry Script functions can safely be run in a background thread, as long as you don’t try to edit the same mesh from multiple threads. In my article on Modeling Mode Extension Plugins, I demonstrated taking advantage of this to build interactive mesh editing tools using Geometry Script that compute the mesh edit asynchronously.

The (***) above is because any Geometry Script function that touches an Asset, Component, or Actor (eg has any of those as input) cannot safely be run asynchronously.
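The two conditions above (the mesh stays referenced, and no mesh is edited from more than one thread) can be sketched in plain Python. This is only an illustration of the pattern, not Geometry Script code: `offset_vertices` is a hypothetical stand-in for an expensive mesh edit, and the meshes are plain vertex lists rather than UDynamicMesh objects.

```python
from concurrent.futures import ThreadPoolExecutor

def offset_vertices(vertices, offset):
    """Hypothetical stand-in for an expensive mesh edit; returns a new vertex list."""
    ox, oy, oz = offset
    return [(x + ox, y + oy, z + oz) for (x, y, z) in vertices]

def edit_meshes_in_background(meshes, offset):
    """Edit each mesh on a worker thread; each mesh is touched by exactly one task."""
    with ThreadPoolExecutor() as pool:
        # The 'meshes' dict keeps every input referenced until all tasks finish.
        futures = {name: pool.submit(offset_vertices, verts, offset)
                   for name, verts in meshes.items()}
        # Results are collected back on the calling thread.
        return {name: future.result() for name, future in futures.items()}

meshes = {
    "mesh_a": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    "mesh_b": [(2.0, 2.0, 2.0), (3.0, 2.0, 2.0), (2.0, 3.0, 2.0)],
}
edited = edit_meshes_in_background(meshes, (0.0, 0.0, 1.0))
```

The worker only ever sees its own vertex list and returns a fresh one, so nothing is shared between threads while the work is in flight.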

Is there any built-in support for running Geometry Script functions asynchronously?

No, as of 5.1 there is not.

Can I run a Geometry Script Blueprint on the GPU?

No, this is not possible and won’t ever be. Geometry Script is a thin wrapper around a large C++ library of mesh processing algorithms and data structures. General-purpose C++ code cannot be run directly on a GPU. In addition, many of the mesh processing operations exposed in Geometry Script, like Mesh Booleans, involve complex queries and modifications over unstructured graphs where dynamic memory allocations are generally involved, which is the kind of computation problem that CPUs are much better at than GPUs.

Does Geometry Script work with Skeletal Meshes?

In 5.1 there is limited support for converting from a SkeletalMesh to a DynamicMesh and back, similar to the StaticMesh approach. However, how to automatically generate or update skin weights after complex mesh edits basically remains an unsolved problem in animation, and so procedural mesh edits done this way are unlikely to result in desirable skin weights.

Does Geometry Script work with Landscape, Geometry Caches, Geometry Collections, Hair/Grooms, Cloth Meshes, or some other UE Geometry Representation?

Not in UE 5.1. Nearly all Geometry Script functions only work with UDynamicMesh objects. There are functions to convert the internal mesh representations from Static and Skeletal Meshes, and Volume Actors, into a UDynamicMesh, and then functions to convert back. No such functions currently exist for these other geometry types.

Is Geometry Script Deterministic? Are scripts likely to break in the future?

Most functions in Geometry Script are deterministic. Several are not, however - in particular mesh simplification and remeshing functions currently may not produce the same mesh every time. In general, it is difficult to provide hard determinism and compatibility guarantees in procedural mesh generation systems, as things that are clear bugs or performance issues can change the result mesh when they are fixed/resolved. Deterministic versions of operations may also be slower, as in some cases the most efficient parallel-processing implementation produces non-determinism. Operations like a Mesh Boolean can have a huge dependency tree of geometric operations, and any change to one of them might affect the result. So the only way to ensure deterministic compatibility is to keep the “old” version of the code around, bloating the binary size (this is what CAD software generally does to ensure compatibility between versions).
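One common way that parallel processing can introduce non-determinism is that floating-point addition is not associative, so combining partial results in a different order can change the last bits of the answer. The sketch below illustrates that general effect in plain Python; it is an assumption that this is representative, and it is not taken from the Geometry Script implementation.

```python
def add_in_order(values):
    """Accumulate floats strictly left to right."""
    total = 0.0
    for value in values:
        total += value
    return total

values = [0.1, 0.2, 0.3]
forward = add_in_order(values)             # (0.1 + 0.2) + 0.3
backward = add_in_order(reversed(values))  # (0.3 + 0.2) + 0.1

# The two sums are mathematically equal but differ in floating point.
order_matters = forward != backward
```

A tiny difference like this can be enough to flip a comparison (for example, which of two nearly equal candidates is processed first), after which the resulting meshes can diverge.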

Can I animate a mesh with Geometry Scripting? Can I implement my own skinning or vertex deformation?

This is technically possible, either by fully regenerating the mesh every frame, or by (for example) using Geometry Script to update the vertex positions of a DynamicMesh every frame. However, this is not a very efficient way to implement animation, and except for very simple examples (eg a few simple primitive shapes, basic mesh booleans, etc) is unlikely to provide acceptable runtime performance. Each time the DynamicMesh is regenerated or updated, a series of relatively expensive operations occur, including generating new vertex and index buffers and uploading them to the GPU (this GPU upload is often the most expensive part). Skinning in something like a SkeletalMesh is computed directly on the GPU and so is much, much faster.

However if you don’t need to update the deformation every frame, or don’t need realtime performance (ie for experimental or research purposes), Geometry Scripting may work for you. It is possible to (eg) iterate over all mesh vertices and update their positions, even for meshes with millions of vertices.
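The per-vertex update described above amounts to a loop over positions. Here is a minimal plain-Python sketch of that idea, assuming vertices are simple (x, y, z) tuples; `apply_wave` and its parameters are hypothetical and are not Geometry Script functions.

```python
import math

def apply_wave(vertices, time, amplitude=1.0, frequency=1.0):
    """Return new positions with Z displaced by a sine wave travelling along X."""
    return [(x, y, z + amplitude * math.sin(frequency * x + time))
            for (x, y, z) in vertices]

# A flat 4x4 grid of vertices in the XY plane.
grid = [(float(i), float(j), 0.0) for i in range(4) for j in range(4)]

# Evaluate the deformation for one frame.
frame0 = apply_wave(grid, time=0.0)
```

The loop itself is cheap. In a DynamicMeshComponent the cost comes from what follows each update, namely rebuilding the vertex and index buffers and uploading them to the GPU.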

Runtime Usage

Can I use Geometry Script in my Game / At Runtime?

Mostly Yes. Some Geometry Script functions are Editor-Only, but the majority work at Runtime. Notable things that do not work at Runtime include creating new Volume actors, creating/updating Static or Skeletal meshes, and Catmull Clark SubD.

Should I use DynamicMeshActors generated with Geometry Script in my Game?

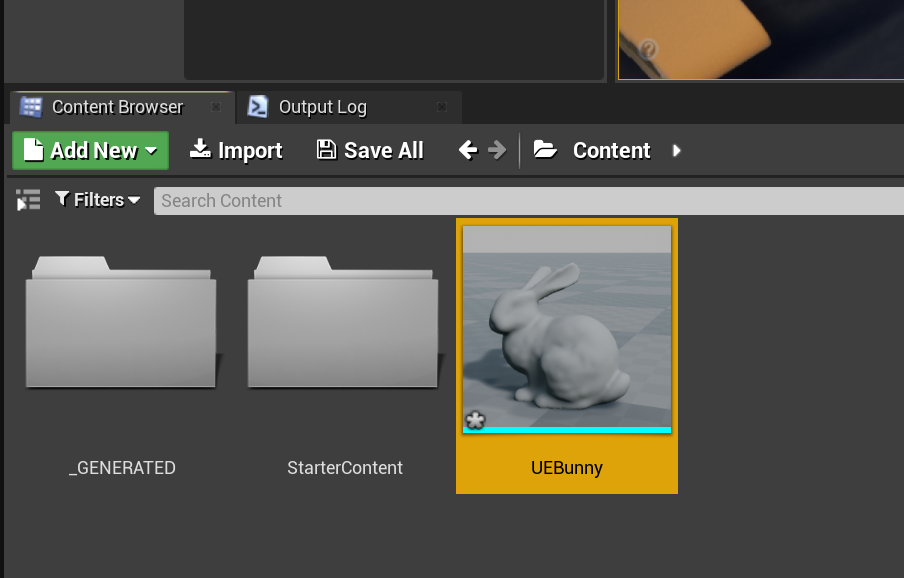

If your meshes are static in the Game, ie you just want the convenience of procedural mesh generation for in-Editor Authoring, then the answer is probably no. DynamicMeshComponent (the component underlying DynamicMeshActor) is relatively expensive compared to StaticMeshComponent (see below). You should almost certainly “bake” any generated DynamicMeshActors into StaticMesh/Component/Actors, which is what we did in the Lyra sample game. I have a short tutorial video on doing so here.

If your meshes are dynamic, ie they need to be dynamically generated at Runtime, or modified in-Game, then the answer is probably yes. There are various other options like ProceduralMeshComponent, the third-party RuntimeMeshComponent which is quite good, and runtime-generated StaticMesh Assets. However none of these options has an internal UDynamicMesh that can be directly edited with Geometry Script.

Will DynamicMeshComponent be as efficient as StaticMeshComponent in my Game?

No. DynamicMeshComponent uses the Dynamic Draw Path instead of the Static Draw Path, which has more per-frame rendering overhead (there is a GDC talk on YouTube about the Unreal Engine Rendering Pipeline by Marcus Wassmer which explains the Static Draw Path optimizations for Static Meshes). DynamicMeshComponent does not support instanced rendering, so mesh memory usage is generally higher. And the GPU index/vertex buffers created by a DynamicMeshComponent are not as optimized as those in a cooked StaticMesh asset (really, really not as optimized).

In addition, the DynamicMeshComponent always keeps the UDynamicMesh memory available on the CPU - a cooked StaticMesh Asset usually does not. The FDynamicMesh3 class underlying UDynamicMesh also has a minimum size and grows in fixed “chunks” of memory, so (eg) a one-triangle DynamicMesh will consume quite a bit more memory than a comparable one-triangle StaticMesh’s FMeshDescription would.

Why are all my GeneratedDynamicMeshActors disappearing in PIE or my built game ?!?

GeneratedDynamicMeshActor is an Editor-Only subclass of DynamicMeshActor, meant for in-Editor procedural mesh generation. GeneratedDynamicMeshActor’s convenient “rebuild” system is usually not appropriate in a game context, where you likely need to more precisely manage when meshes are generated/etc. I have a short tutorial video here on how to set up a DynamicMeshActor that can be generated/edited at Runtime.

Is GeometryScript function X fast enough to use in my game?

Since Geometry Script works on Meshes, which have a variable number of triangles and vertices, the only answer anyone can ever give to this question is “you will have to try it and profile”. Any non-trivial function in Geometry Script is at least linear in the number of vertices/triangles, and many are more complex. For example the Mesh Boolean node must build an AABBTree for each of the two input meshes, then do relatively expensive pairwise-triangle intersection tests (based on the AABBTree traversal, which efficiently skips most non-intersections). If you have two basic cubes, this is quite fast. If you try to subtract a cube every frame, the mesh will accumulate more and more triangles over time, and the Boolean will become increasingly expensive.
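The bounding-box rejection that lets a tree traversal skip most non-intersecting triangle pairs can be sketched in isolation. This plain-Python illustration is not the Geometry Script implementation: it tests every pair of boxes directly, whereas an AABBTree would discard whole groups of triangles at once. All function names are hypothetical.

```python
def triangle_bounds(tri):
    """Axis-aligned bounding box of a triangle as (min_corner, max_corner)."""
    xs, ys, zs = zip(*tri)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def boxes_overlap(a, b):
    """True if two axis-aligned boxes overlap (touching counts as overlap)."""
    (amin, amax), (bmin, bmax) = a, b
    return all(amin[i] <= bmax[i] and bmin[i] <= amax[i] for i in range(3))

def candidate_pairs(mesh_a, mesh_b):
    """Triangle index pairs whose boxes overlap; only these need an exact test."""
    boxes_a = [triangle_bounds(t) for t in mesh_a]
    boxes_b = [triangle_bounds(t) for t in mesh_b]
    return [(i, j)
            for i, box_a in enumerate(boxes_a)
            for j, box_b in enumerate(boxes_b)
            if boxes_overlap(box_a, box_b)]

mesh_a = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
          ((10.0, 0.0, 0.0), (11.0, 0.0, 0.0), (10.0, 1.0, 0.0))]
mesh_b = [((0.5, 0.5, -1.0), (0.5, 0.5, 1.0), (0.6, 0.6, 0.0)),
          ((20.0, 0.0, 0.0), (21.0, 0.0, 0.0), (20.0, 1.0, 0.0))]
pairs = candidate_pairs(mesh_a, mesh_b)
```

Of the four possible triangle pairs here, only one survives the box test and would go on to the more expensive exact intersection test. As triangle counts grow, both the number of boxes and the number of surviving pairs grow with them.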

How can I save/load Dynamic Meshes at Runtime?

Unreal Engine doesn’t provide any built-in mesh load/save system at Runtime. You would have to implement this yourself in C++. There is a system called Datasmith Runtime which can load various mesh formats at Runtime but this is not part of Geometry Scripting.

Can I use Geometry Script to modify Static Meshes in my Level at Runtime?

No, this is not possible. Static Mesh Assets you created in the Editor and placed in a level are “cooked” when you create your game executable. It is not possible to update a cooked asset at Runtime, because the mesh data has been converted to optimized GPU index and vertex buffers.

The function “Copy Mesh From Static Mesh” is not working at Runtime

Try checking the Output Log, you will likely see that there are warning messages about the “Allow CPU Access” flag on the Static Mesh Asset. You must enable this flag in the Editor to be able to access the StaticMesh index and vertex buffers on the CPU at Runtime. Note that this will increase memory usage for the Asset.

The Mesh I get when using “Copy Mesh From Static Mesh” at Runtime is different than the mesh I get in the Editor

In the Editor, by default CopyMeshFromStaticMesh will access the “Source” mesh, however at Runtime the Source mesh is not available. Even with the Allow CPU Access flag enabled, at Runtime only the “cooked” index and vertex buffers that will be passed to the GPU are available on the CPU. This representation of the mesh does not support “shared” or “split” UVs or normals, so the mesh will be split along any UV and hard-normal seams. So what would be a closed solid cube in the Editor will become 6 disconnected rectangles in the index/vertex buffer representation. This is problematic for many mesh modeling operations. You can in many cases use the Weld Mesh Edges function to merge the mesh back together at the added seams, however this may introduce other problems, and very small triangles may have been fully discarded.
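The cube example can be reproduced in a few lines of plain Python. The sketch below builds a cube the way an index/vertex buffer would store it (each face owning its own four vertices), counts the disconnected pieces, and then merges vertices by position. The position-based merge is a simplified stand-in used for illustration; it is not the algorithm behind the Weld Mesh Edges function, and all names are hypothetical.

```python
def make_split_cube():
    """Unit cube stored per-face: 24 vertices, 12 triangles (winding is ignored)."""
    vertices, triangles = [], []
    for axis in range(3):
        for side in (0.0, 1.0):
            base = len(vertices)
            for u, v in ((0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)):
                p = [u, v]
                p.insert(axis, side)  # place the fixed coordinate on this face's axis
                vertices.append(tuple(p))
            triangles.append((base, base + 1, base + 2))
            triangles.append((base, base + 2, base + 3))
    return vertices, triangles

def count_components(num_vertices, triangles):
    """Number of pieces, where triangles connect only through shared vertex indices."""
    parent = list(range(num_vertices))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b, c in triangles:
        parent[find(a)] = find(c)
        parent[find(b)] = find(c)
    return len({find(i) for i in range(num_vertices)})

def weld_by_position(vertices, triangles, tolerance=1e-6):
    """Merge vertices whose positions match after snapping to a tolerance grid."""
    remap, welded, index_of = [], [], {}
    for p in vertices:
        key = tuple(round(c / tolerance) for c in p)
        if key not in index_of:
            index_of[key] = len(welded)
            welded.append(p)
        remap.append(index_of[key])
    return welded, [tuple(remap[i] for i in tri) for tri in triangles]

split_vertices, split_triangles = make_split_cube()
pieces_before = count_components(len(split_vertices), split_triangles)

welded_vertices, welded_triangles = weld_by_position(split_vertices, split_triangles)
pieces_after = count_components(len(welded_vertices), welded_triangles)
```

Before welding, the 24-vertex buffer forms six separate rectangles. After merging coincident positions, the same 12 triangles reference 8 vertices and form a single connected shell.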

The functions “Apply Displace from Texture Map” and/or “Sample Texture2D at UV Positions” are working in the Editor but not at Runtime

Try checking the Output Log, you will likely see warning messages about Texture Compression. In their cooked representation, Texture2D Assets are generally compressed in formats that can only be efficiently decompressed on the GPU, and so are not readable in Geometry Script. The VectorDisplacementMap texture compression mode in the Texture2D Asset is effectively uncompressed RGBA8, so you must configure a Texture asset with this compression mode in the Editor for it to be readable at Runtime.
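To see why an uncompressed RGBA8 layout is easy to read on the CPU, here is a minimal plain-Python sketch of a nearest-texel lookup at a UV position. It assumes a tightly packed buffer with 4 bytes per texel, rows stored top to bottom starting at v = 0, and UVs in the range [0, 1]. The function name and layout are assumptions for illustration, not the Geometry Script implementation.

```python
def sample_rgba8(pixels, width, height, u, v):
    """Nearest-texel lookup in a packed RGBA8 buffer; returns floats in [0, 1]."""
    # Clamp so that u == 1.0 or v == 1.0 still lands on the last texel.
    x = max(0, min(int(u * width), width - 1))
    y = max(0, min(int(v * height), height - 1))
    offset = 4 * (y * width + x)
    return tuple(channel / 255.0 for channel in pixels[offset:offset + 4])

# A 2x2 texture: red, green on the first row; blue, white on the second.
pixels = bytes([255, 0, 0, 255,    0, 255, 0, 255,
                0, 0, 255, 255,    255, 255, 255, 255])

top_left = sample_rgba8(pixels, 2, 2, 0.25, 0.25)
top_right = sample_rgba8(pixels, 2, 2, 0.75, 0.25)
bottom_right = sample_rgba8(pixels, 2, 2, 1.0, 1.0)
```

Each texel sits at a fixed byte offset, so a lookup is just index arithmetic. Block-compressed formats do not have this property, which is why they cannot be sampled this simply on the CPU.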

Rendering and UE Features

Does DynamicMeshComponent support Nanite, Lumen, or Mesh Distance Fields?

As of 5.1, no.

Does DynamicMeshComponent work with Runtime Virtual Texturing (RVT)?

As of 5.1, no. RVT requires usage of the Static Draw Path, and DynamicMeshComponent uses the Dynamic Draw Path.

Does DynamicMeshComponent support Physics / Collision?

Yes. DynamicMeshComponent supports both Complex and Simple collision, similar to StaticMesh. The Collision settings and geometry are stored on the DynamicMeshComponent, not the DynamicMesh directly (unlike how they are stored on a StaticMesh Asset). So, to set collision geometry or change settings, you must call functions on the Component, not on the DynamicMesh.

DynamicMeshComponents don’t show up in Collision Debug Views!

This is currently not supported in UE 5.1.

Does DynamicMeshComponent support LODs?

No.

Does DynamicMeshComponent support Instanced Rendering?

As of 5.1, no.

Lyra Sample Game

How does the Non-Destructive Level Design with GeometryScript-based mesh generator “Tool” objects work in Lyra?

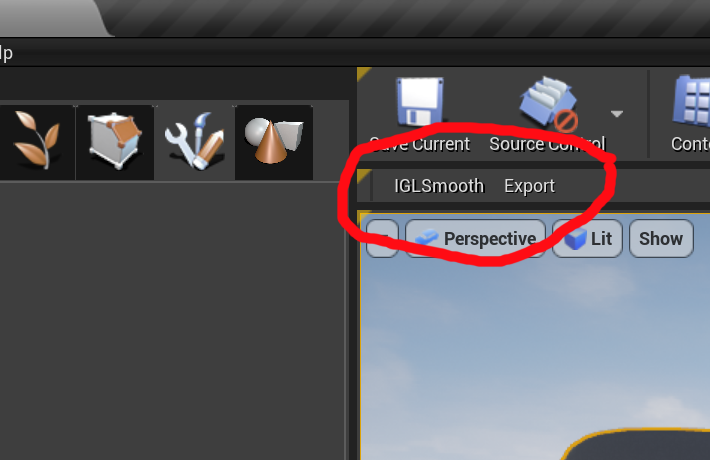

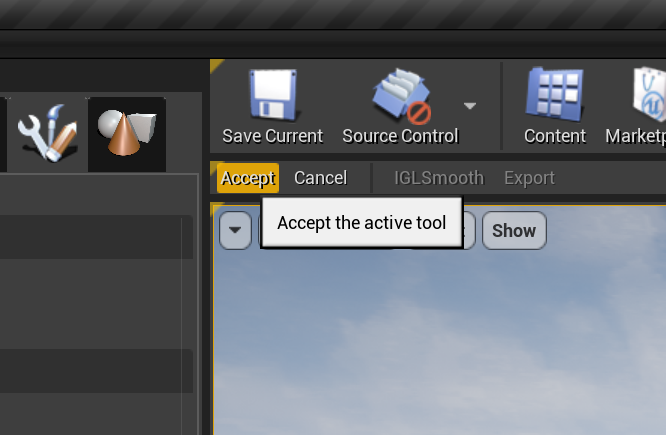

I wrote extensive documentation for the Lyra Tool system here: https://docs.unrealengine.com/5.0/en-US/lyra-geometry-tools-in-unreal-engine/. The basic principle is that StaticMeshComponents are linked with a GeneratedDynamicMeshActor “Tool” mesh generator which still exists in the level (they are “stored” under the map). A helper Actor called the “Cold Storage” is used to maintain links between the Tool instance and its Components. Each Tool must be associated with a single StaticMesh Asset.

How can I migrate the Lyra Tool system to my own project?

This is somewhat complex, and the simplest route would be to migrate your content into a copy of the Lyra game. However, several users have figured out how to migrate the Tool system from Lyra to their own projects. This YouTube tutorial by BuildGamesWithJohn is one that I believe will work, and other users have reported that this tutorial by JohnnyTriesVR will also work.